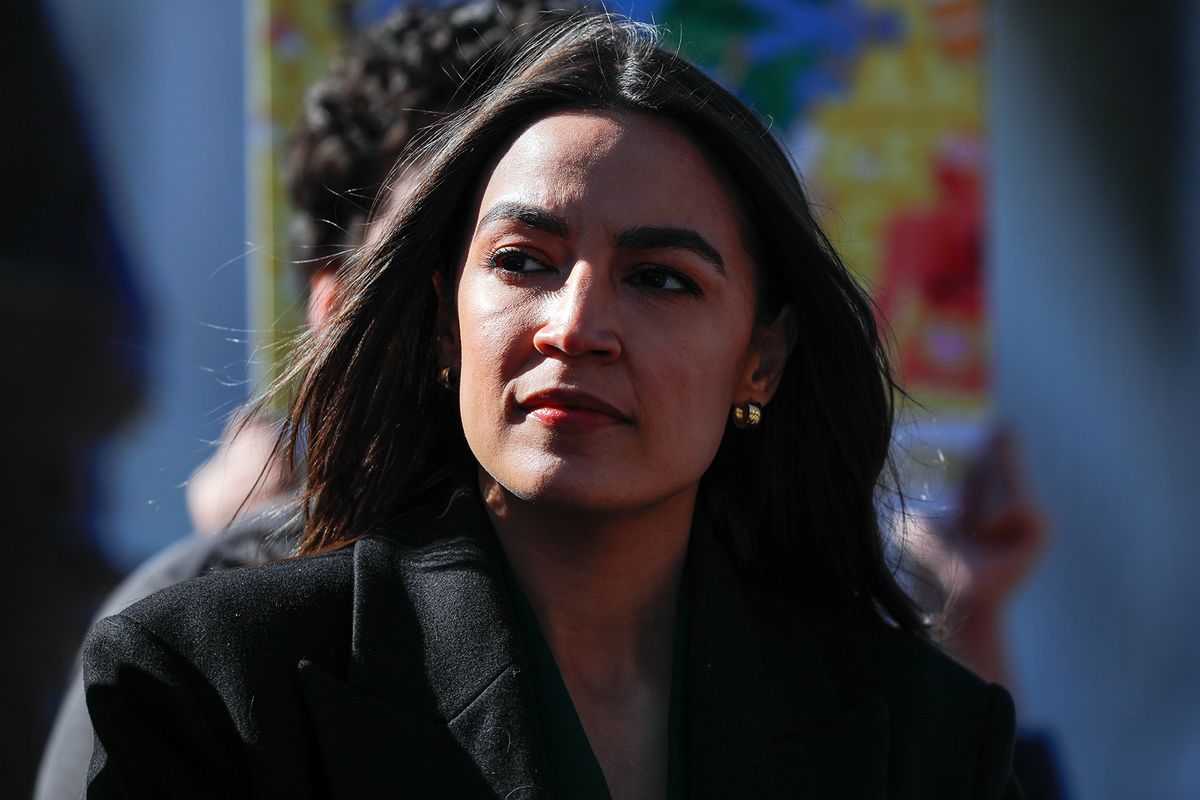

Rep. Alexandria Ocasio-Cortez (D-N.Y.) has been the subject of AI deepfake imagery for years, and she’s ready to fight against non-consensual, sexually explicit, AI-generated imagery.

Ocasio-Cortez reveals to Rolling Stone that she will be leading the House companion of the Disrupt Explicit Forged Images and Non-Consensual Edits (DEFIANCE) Act of 2024 with a bipartisan group of representatives. The bill is her first move since being named to the House of Representatives’ bipartisan task force on AI, which was announced last month.

The legislation amends the Violence Against Women Act (VAWA) so that people can sue those who produce, distribute, or receive deepfake pornography if they “knew or recklessly disregarded” that the victim did not consent to those images.

“How we answer these questions is going to shape how all of us live as a society, and individually the things that are going to happen to us or someone that we know, for decades,” Ocasio-Cortez tells Rolling Stone. She says there is an “urgency of the moment because folks have waited too long to set the groundwork for this,” and that lawmakers need to confront the issue and decide how to regulate deepfake technology in a way that protects victims. “But there’s also the necessity to think deeply and take very seriously the conclusions and the actions that we come to.”


Ocasio-Cortez says that, when working on the bill, it was crucial to her and her team to work closely with abuse survivors. “It’s just a different way of legislating around this where you’re really centering the people that have been most affected by this,” she says.

More than 25 organizations have endorsed the bipartisan legislation, including the National Women’s Law Center, the Sexual Violence Prevention Association, the National Domestic Violence Hotline, and UltraViolet.

Ocasio-Cortez is co-leading the bill with Sens. Dick Durbin (D-Ill.) and Lindsey Graham (R-S.C.). The Senate introduced the DEFIANCE Act on Jan. 30, about a week after several AI-generated, sexually explicit deepfakes of Taylor Swift went viral on X. Today, the House is introducing a companion bill: a bill with similar or identical language that allows both chambers of Congress to consider the legislation simultaneously.

The bill defines “digital forgeries” as visual depictions “created through the use of software, machine learning, artificial intelligence or any other computer-generated or technological means to falsely appear to be authentic.” Any digital forgeries that depict the victims “in the nude or engaged in sexually-explicit conduct or sexual scenarios” would qualify. Victims would be able to sue “individuals who produced or possessed the forgery with intent to distribute it; or who produced, distributed, or received the forgery” if the individual knew the victim didn’t consent.

The rise of generative AI is making it easier than ever for the public to create realistic images. A 2019 study by cybersecurity company DeepTrace Labs, which builds tools to detect deepfakes, found that 96 percent of deepfake videos were non-consensual pornography, all of which depicted women. As UN Women reports, people who face multiple forms of discrimination, including Black and Indigenous women and other women of color, LGBTQ people, and women with disabilities, are at heightened risk of experiencing technology-facilitated gender-based violence.

If the bill passes the House and Senate, it would become the first federal law to protect victims of deepfakes, providing a civil recourse for them.

That’s not to say there haven’t been previous efforts to curtail deepfakes, although so far none of the earlier bills targeting them has advanced. Rep. Yvette Clarke (D-N.Y.) introduced a DeepFakes Accountability Act in June 2019 and again in September 2023, in an attempt to establish criminal penalties and provide legal recourse to deepfake victims. In May 2023, Rep. Joe Morelle (D-N.Y.) introduced the Preventing Deepfakes of Intimate Images Act, which would have criminalized the sharing of non-consensual, sexually explicit deepfakes.

Despite these previous efforts, current federal law does not provide any protections for the specific harms victims of deepfakes face.

“We’ve been working on this legislation before I even knew that I was going to be named to the bipartisan AI task force,” says Ocasio-Cortez. “It’s a really, really big deal.”


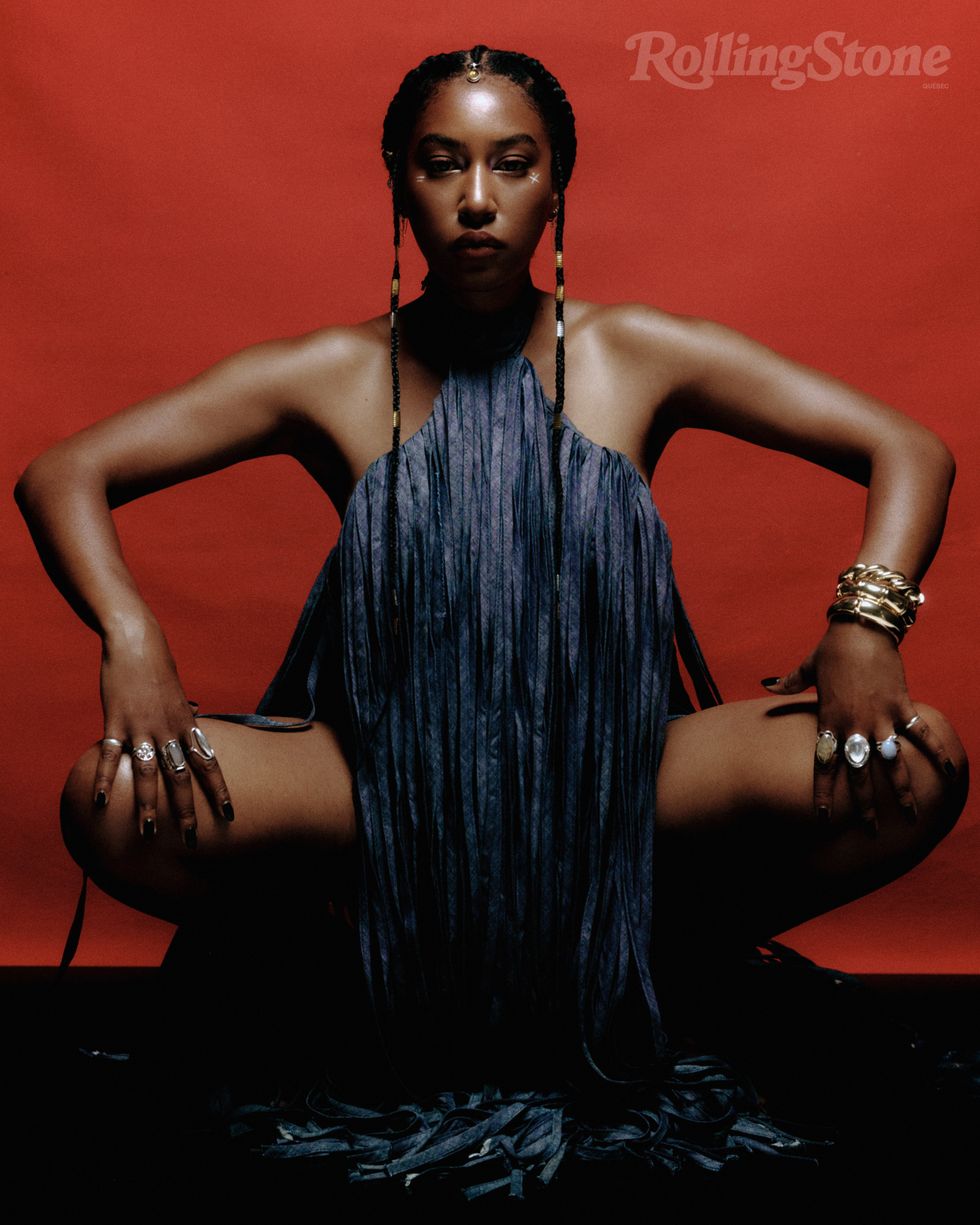
